The two alternatives to the monasterisation of the World Wide Web

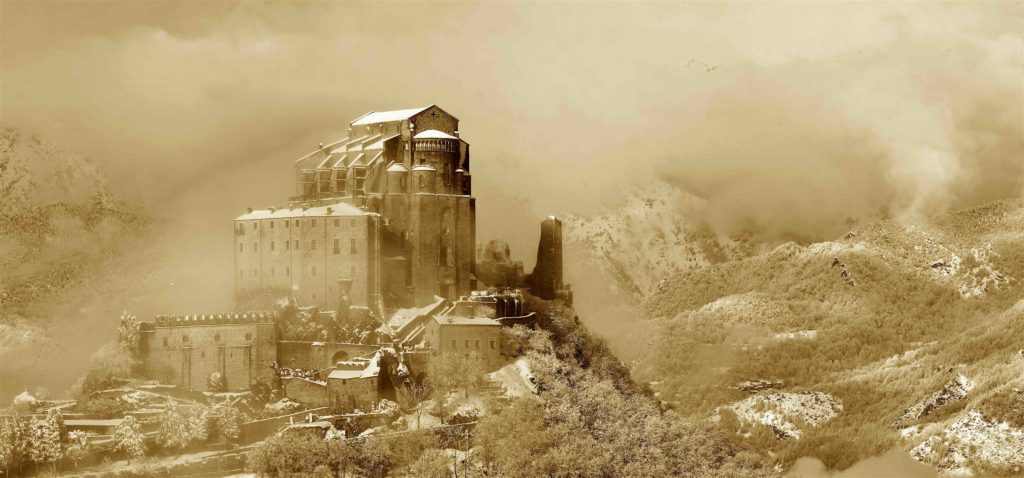

In Medieval Europe, information was physically concentrated in a few secluded libraries and archives. Powerful institutions managed them and regulated who could access what. The library of the fictional abbey described in Umberto Eco’s The Name of the Rose is located in a fortified tower, and only the librarian knows how to navigate its mysteries. Monasteries played an essential role in preserving written information and creating new intelligence from that knowledge. But because written information was a scarce resource, the keys to the libraries also brought authority and power. Similarly, Internet companies are amassing information within their fortified walls. In so doing, they provide services that we now see as essential, but they also contravene the two core principles of the Internet: openness and decentralisation.

What is the Internet? What is the Web?

In the aftermath of another data scandal, this time involving Facebook, the largest repository of personal and social data in history, and with the aim of explaining what alternatives we might have to the monasterisation of the Web, I think it is appropriate to start with a short answer to these two basic questions.

The Internet is fundamentally two things: computers and protocols. Computers understand each other because they speak the same language, as defined by shared protocols. The driver of the success of the Internet is that being part of it has no entry barriers: protocols are free (as in “free beer”) and so is admission to the club. This largely explains why we have only one Internet covering the entire planet instead of multiple internets covering small parts of it.[note]Although we now take the uniqueness of the Internet for granted, an alternative scenario is as realistic as a printer that doesn’t work with your computer or a Skype account that can’t call a WhatsApp account.[/note] Once costs (virtually zero) and benefits (as large as the Internet) are accounted for, it is difficult to justify connecting to disconnected alternatives.

The World Wide Web is not the Internet. The Web is the disordered collection of digital documents that are unambiguously accessible via the Internet. No ordering is necessary because every online document is associated with a unique address, and we can always find the document by following that address. And to find our way to the document, we don’t actually use any index but simply interrogate a distributed register system to get a workable route to it. Most of the documents that we find surfing the Web are HTML pages (another free and open standard), but there is technically no limit to the kind of documents that people can share; in fact, the only limits that we see are legal ones.
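
To make the addressing idea concrete, here is a minimal sketch of how an address is split into the name of a machine and the path of a document on it. The `REGISTER` table and the `locate` function are hypothetical stand-ins of mine for the distributed register, which in reality is queried over the network; the numeric address comes from a range reserved for documentation.

```python
from urllib.parse import urlsplit

# Hypothetical stand-in for the distributed register: host name -> machine address.
# 192.0.2.10 belongs to a block reserved for documentation examples.
REGISTER = {"example.org": "192.0.2.10"}


def locate(url: str) -> tuple:
    """Return (machine address, document path) for a Web address."""
    parts = urlsplit(url)
    address = REGISTER[parts.hostname]  # the lookup that the register performs for us
    return address, parts.path or "/"   # an empty path means the root document


route = locate("https://example.org/essays/web.html")
```

No index of documents is involved at any point: the address alone is enough to know which machine to ask and what to ask it for.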

The Web was designed for the Internet and in line with its core principles: open to all and decentralised. Rules exist but are limited to ensuring the efficient implementation of the information and communication project by defining its technological metastructure. Still, the extraordinary success of the Internet+Web project created a subordinate problem: chaos. As the amount of information increased, so did the confusion of users. Protocols efficiently solved the technological challenges of connecting machines and documents but crucially did (and still do) a very poor job of helping human users navigate the information space defined by those documents to find what’s relevant to them.

Why are Google, Facebook and Amazon for-profit?

There is no doubt about the value of the services provided by the likes of Google, Facebook and Amazon. As human beings, we navigate and process information through reference and abstraction. In other words, we can’t really value something that is not related to information we already possess or that can’t be abstracted into categories that we already have. For example, we generally have very limited interest in the picture of a newborn baby if it is not the baby of somebody we know. The Internet+Web technologies can give us access to the pictures of millions of newborn babies every day, but only Facebook can point us to the one picture we are really interested in seeing.

Given the clear need for such services, the question is: if the Internet and Web technologies were developed to be free (to use) and open (to join), why were the search technologies that make Google, Facebook and Amazon possible developed within for-profit, walled platforms instead? An answer I find convincing is that the Internet and the Web are free and open because no paid-for, closed alternative would have attracted such universal adoption. In other words, adoption was driven by the affordability and openness of the technologies more than by their intrinsic use value.

But why are these technologies free in the first place? Because they are relatively simple.[note]Don’t get me wrong: the vision that was necessary to develop these technologies was nothing short of extraordinary.[/note] Let’s not forget that these technologies allow only machine-to-machine communication. The first specification of the Transmission Control Protocol (still in use) was published in 1974 by three researchers. The development of the Hypertext Transfer Protocol (HTTP) was a one-man project. Machine-to-human communication is incredibly more complicated and, consequently, its problems are much more expensive to solve. In 2017, according to Bloomberg, Amazon spent 22.6 billion (with a “b”) dollars on Research and Development, Google 16.6, Facebook 7.8. To put these figures into context, it is worth noting that the most expensive publicly funded scientific experiment in the history of humankind has relied, over the last 10 years, on an average annual budget of 1.1 billion dollars.

The real cost in the development of search technologies is data on human behaviour. While we can precisely predict how a machine will react after receiving a packet of bits (we know it because we designed the machine), we have only a vague idea of how a person will; in fact, we might be better off betting on a range of possible reactions. We need behavioural data to develop search technologies and we need sophisticated sensory systems to collect them. Amazon, Google, Facebook (and every other tech company you can think of) are constantly collecting information on their users and constantly using it to improve their services and stay ahead of the competition.

The emergence of huge platform systems, which are neither free nor open, responds to the need to continuously create and retain human behaviour data. The development and everyday application of these search technologies need a centrally controlled environment because only the systematic accumulation of data makes the data valuable. As they stand, the Web’s free and open technologies are effective in allowing your laptop to receive bits from the server where what you are reading now is physically stored, but they won’t help you if you need to locate the closest pharmacy. For that, you need Google, which will translate your human request “closest pharmacy” into a machine-readable request (which necessarily involves understanding the abstract and context-dependent category of “pharmacy”), find the information, and send it back to you in a human-readable format.

Is there any free and open alternative to the monasterisation of the Internet+Web?

The capabilities of platform systems that we experience every day were made possible because platforms rejected the tenets of an open and free Internet. Instead of a distributed and decentralised system, platforms opted early on for closure and centralisation of information. Simply put, it is impossible to imagine that an intelligent system might emerge if it is not able to collect information from the entire network and make human sense (not machine sense) of it. But at the same time, it is very difficult to imagine how an organisation other than a for-profit corporation will ever have the resources to do that. The only possible solution seems to be a network where data are distributed across multiple computers, exactly as the Internet+Web was intended to be, but that still allows every single computer to easily and quickly interrogate the entire network without the need to physically store it, because only an actor of the size of Google would have the resources to do that.

I see two alternatives to a World Wide Web where information and intelligence are concentrated in beautiful but inaccessible monasteries. The first solution involves the creation of data banks. The second requires the widespread adoption of a different file system. And, by the way, these two alternatives are totally compatible.

Data banks

The idea is simple: to separate data consumption from data storage. This must involve the creation of intermediaries that will store data and manage access to them on behalf of the data owners. It is basically what traditional banks do with your money: they store it in an account bearing your name and lend it out based on your preferences (low risk vs high risk). Once you have an account with a data bank, you can upload into it your entire stock of personal information (from holiday pictures to blood pressure measurements) and the bank will keep updating it with the stream of data produced by your daily activities: posting on Facebook, taking a picture with your Android phone, sending an email from Gmail, running with your Apple Watch.

When you sign up for a new online service, let’s say Netflix, you might find it convenient to allow the service to suggest what to watch next. To do this, you will need to give Netflix explicit permission to access the data that Netflix’s algorithm might need: from the log of your reactions to movies you saw in the past (a ‘like’ under a Facebook post or a tweet you published) to more personal information such as your age, gender and sexual orientation. Every piece of information can be released to Netflix only if you explicitly say so. But the best guarantee against possible misuse of your data is that Netflix won’t store any of your data (or a profile about you): if you produce data on Netflix (views, likes, etc.), these data must go back to your data account.
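
The permission model described above can be sketched in a few lines. This is only an illustration under my own assumptions: `DataAccount`, its methods and the field names are hypothetical, and a real data bank would also need authentication, encryption and auditing.

```python
from dataclasses import dataclass, field


@dataclass
class DataAccount:
    """A hypothetical personal account held at a data bank."""
    owner: str
    records: dict = field(default_factory=dict)  # field name -> value
    grants: dict = field(default_factory=dict)   # service name -> set of readable fields

    def grant(self, service: str, *names: str) -> None:
        """The owner explicitly allows a service to read the named fields."""
        self.grants.setdefault(service, set()).update(names)

    def revoke(self, service: str, *names: str) -> None:
        """The owner withdraws a permission given earlier."""
        self.grants.get(service, set()).difference_update(names)

    def read(self, service: str, name: str):
        """Release a field only if the owner granted it to this service."""
        if name not in self.grants.get(service, set()):
            raise PermissionError(f"{service} may not read {name!r}")
        return self.records[name]

    def write_back(self, name: str, value) -> None:
        """Data produced on a service return to the owner's account."""
        self.records[name] = value


account = DataAccount(owner="alice", records={"age": 34, "film_likes": ["The Name of the Rose"]})
account.grant("netflix", "film_likes")            # explicit, per-field permission
likes = account.read("netflix", "film_likes")     # allowed
account.write_back("netflix_views", ["episode 1"])  # new data go back to the account
```

The design choice that matters is that the service never keeps its own copy: it reads what it was granted and writes back what it produces.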

Your data bank must offer you the possibility of making part of your data public. For example, you might want the information that is usually part of a resume to be accessible to anyone who might be interested. Or you might want to publish information about your availability to drive somebody around between 9 pm and 10 pm on a specific day for a given price (but if you want, you can allow a third-party service to negotiate the price for you). Or you might want to allow not-for-profit research organisations to access your entire medical history.

(On data banks, see Chapter 10 of The Master Algorithm by Pedro Domingos.)

Block-chaining the world file system

In this case, the idea is to introduce a new file system that allows you to safely distribute an encrypted version of your data, to which you and only you retain a key. This new file system already has one implementation, ambitiously called the InterPlanetary File System (IPFS). The IPFS, which is open source and free to use, works by assigning each file a unique fingerprint, by distributing the physical storage of each file throughout the network in a smart way, and by giving the system the responsibility of maintaining an index of each file (what is where). The IPFS removes the need to centralise the physical storage of data. The system is distributed, so the availability of a file added to the system doesn’t depend on any single node of the network: this means that if a node goes offline or a region of the network is cut off from the Internet (because of a natural disaster or a political decision), access to individual files is less likely to be compromised.
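
The fingerprint-and-replicate idea can be illustrated with a toy store. This is a simplified sketch and not how the IPFS is actually implemented: the `ContentStore` class, the replica rule and the plain SHA-256 fingerprint are my own assumptions (the real system uses its own identifier format and a distributed index), and encryption is left out.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Derive a file's address from its content alone."""
    return hashlib.sha256(content).hexdigest()


class ContentStore:
    """Toy network of nodes that keep files under their fingerprints."""

    def __init__(self):
        self.nodes = {}  # node name -> {fingerprint: content}

    def add_node(self, name: str) -> None:
        self.nodes[name] = {}

    def put(self, content: bytes, replicas: int = 2) -> str:
        key = fingerprint(content)
        # Hypothetical placement rule: rank nodes by a hash of (node name + key).
        ranked = sorted(self.nodes, key=lambda n: fingerprint(n.encode() + key.encode()))
        for name in ranked[:replicas]:
            self.nodes[name][key] = content
        return key

    def get(self, key: str, offline=()) -> bytes:
        """Fetch a file from any reachable node and verify it against its fingerprint."""
        for name, blobs in self.nodes.items():
            if name in offline or key not in blobs:
                continue
            content = blobs[key]
            if fingerprint(content) != key:
                raise ValueError("content does not match its fingerprint")
            return content
        raise KeyError(key)


store = ContentStore()
for node in ("lisbon", "nairobi", "osaka"):
    store.add_node(node)
key = store.put(b"holiday picture")
```

Because the address is derived from the content, any node can serve the file and the reader can check that what arrived is what was asked for; with two replicas, the file also stays reachable when one of the nodes holding it goes offline.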

The blockchain part of the idea is necessary to guarantee that the content of the file and every successive modification to it are authorised by whoever ‘owns’ the file (although ownership, in this case, refers to intellectual ownership and not to physical ownership). A blockchain is a database in the form of a growing chain of blocks, where each block is linked to the previous block by a cryptographic hash, so that any alteration of an earlier block is detectable. This structure makes it an ideal candidate for a distributed (peer-to-peer) system because it removes from the equation the need to trust the nodes that physically store the data.
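
A minimal sketch of the hash link, assuming nothing more than a list of dictionaries: the function names and the block layout are mine, and the sketch leaves out what a real blockchain also needs, such as digital signatures to prove who authorised a change and a consensus rule among nodes.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """Hash a block's full content, including its link to the previous block."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_block(chain: list, data: str) -> None:
    """Add a block that records the hash of the block before it."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain: list) -> bool:
    """Check that every block still points at the true hash of its predecessor."""
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


chain = []
append_block(chain, "file created")
append_block(chain, "first edit")
append_block(chain, "second edit")
print(is_valid(chain))       # True

chain[0]["data"] = "forged"  # a storing node rewrites history
print(is_valid(chain))       # False
```

Anyone holding a copy of the chain can run the check, which is why the nodes that store the data do not need to be trusted.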

What are the benefits?

- By separating the data from the services that use them to create value (ads, search, communication, trade, etc.), we revitalise the entire Web ecosystem by removing what prevents the next new thing from emerging. Competition and competitive innovation are only possible if Google and every other search engine (and Facebook and every other social networking service, etc.) have access to the same data pool. In other words, Web services won’t be able to maintain their dominant position only because of the data they have accumulated or by locking in users and refusing to let them move their data elsewhere; they will need to compete only on the quality of their services. Also, levelling the access-to-data field would make it possible for not-for-profit projects (e.g. open source and research projects), which obviously lack the resources to acquire a critical mass of data points, to still create competitive tools and services.

- Data stay under the control of the data creator. The creator maintains the intellectual ownership of the data and can transparently set what is used by whom and how. Moreover, by bringing the data under the umbrella of specialised service providers, we dramatically increase security against data breaches. It is not by chance that banks are among the safest places to store your money: a bank that loses its clients’ money won’t stay in business for long.

We need standards and governments

A more open and more decentralised alternative to the current Age of Monasteries, let’s call it the Internet Renaissance to continue with the historical metaphor, necessarily involves the definition of standards. Data can be portable between services and readable by different services only if their content is expressed in a structured way. How can I, in practice, communicate to the world that I’m happy to drive people around between 9 pm and 10 pm? I need to present the information in a way that can be understood by every piece of software that is interested in it. Internal standardisation is a major advantage of platforms. Horizontal communities, like the community of Wikipedia editors, have shown that they can agree on a minimum set of standards to publish information (and Wikidata is the result of this). But governments and intergovernmental organisations (with ITU being the most obvious forum) must play a role in fostering working groups on standards and must create a regulatory framework to protect data and to protect the Internet, humanity’s largest and most widely shared repository of human knowledge and experience.
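
To show what “expressed in a structured way” could mean for the driving example, here is a sketch that publishes the offer as JSON and reads it back. The field names in `REQUIRED_FIELDS` and the two functions are hypothetical; no existing standard is implied, which is precisely the gap that a standards body would have to fill.

```python
import json
from datetime import datetime

# Hypothetical minimum vocabulary that publishers and readers would have to agree on.
REQUIRED_FIELDS = {"type", "service", "start", "end", "price", "currency"}


def make_offer(start: datetime, end: datetime, price: float, currency: str) -> str:
    """Publish a ride offer as structured, software-readable text."""
    offer = {
        "type": "Offer",
        "service": "ride",
        "start": start.isoformat(),
        "end": end.isoformat(),
        "price": price,
        "currency": currency,
    }
    return json.dumps(offer, sort_keys=True)


def read_offer(text: str) -> dict:
    """Parse an offer and reject it if an agreed field is missing."""
    offer = json.loads(text)
    missing = REQUIRED_FIELDS - set(offer)
    if missing:
        raise ValueError(f"offer is missing fields: {sorted(missing)}")
    offer["start"] = datetime.fromisoformat(offer["start"])
    offer["end"] = datetime.fromisoformat(offer["end"])
    return offer


published = make_offer(datetime(2018, 4, 20, 21, 0), datetime(2018, 4, 20, 22, 0), 15.0, "EUR")
offer = read_offer(published)
```

Any service that knows the agreed fields can read the offer without knowing anything about the software that wrote it.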